Description: This repo contains GGUF format model files for Meta Llama 2's Llama 2 70B Chat. Llama 2 70B Orca 200k - GGUF. Model creator: ... `import transformers`; `model_id = 'meta-llama/Llama-2-70b-chat-hf'`; `device = f'cuda:{cuda.current_device()}' if ...` Description: This repo contains GGUF format model files for Upstage's Llama 2 70B Instruct v2. Llama 2 is available in three sizes (7 billion, 13 billion, and 70 billion parameters), depending on the ... If not provided, we use TheBloke/Llama-2-7B-chat-GGML and llama-2-7b-chat.ggmlv3.q4_0.bin as ... Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 ... For those interested in leveraging the groundbreaking 70B Llama 2 GPTQ, TheBloke made this possible.

https://huggingface.co/TheBloke/Llama-2-70B-GPTQ

Llama 2 13B - GGUF. Model creator: ... Description: This repo contains GGUF format model files for Meta's Llama 2 13B. About GGUF: GGUF is a new ... Description: This repo contains GGUF format model files for Microsoft's Orca 2 13B. These files were quantised using hardware kindly provided by Massed Compute. About GGUF: GGUF is a new ... Let's look at the files inside the TheBloke/Llama-2-13B-chat-GGML repo. We can see 14 different GGML models, corresponding to different types of quantization. Run Code Llama 13B GGUF Model on CPU: Welcome to this tutorial on using the GGUF format with the 13B Code Llama model, all on a CPU machine and ... Head over to ollama.ai/download and download the Ollama CLI for macOS. Install the 13B Llama 2 model: open a terminal window and run the following ...

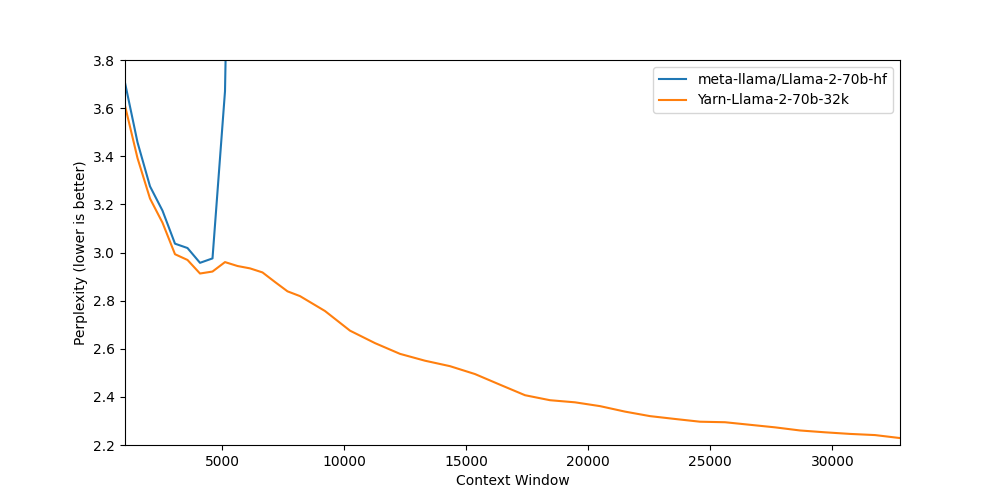

Description: This repo contains GPTQ model files for Meta Llama 2's Llama 2 70B. Llama 2 70B Instruct v2. Description: This repo contains GPTQ model files for ... The size of Llama 2 70B in fp16 is around 130 GB, so no, you can't run Llama 2 70B fp16 on 2 x 24 GB. The GPTQ links for Llama 2 are in the wiki. In the section labeled "Download custom model or LoRA", enter TheBloke/Llama-2-70B-chat-GPTQ. This is an implementation of the TheBloke/Llama-2-70b-Chat ... Click this RunPod template link. 1. Select a suitable GPU; the VRAM needed depends on which model size ... Sabin_Stargem (6 months ago): Hopefully the L2-70b GGML is a 16k edition with ...
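The "around 130 GB" figure quoted above can be checked with simple arithmetic: parameter count times bytes per parameter. The sketch below is only a rough estimate under stated assumptions: it uses the nominal 70 billion parameter count, counts weights only (no KV cache, activations, or quantization metadata), and treats "24 GB" as 24 GiB. The function names are hypothetical and not part of any library.

```python
# Rough weight-memory estimate; all names here are illustrative, not a library API.
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(n_params: float, bits_per_param: float) -> float:
    """Memory needed for the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / GIB


def fits(n_params: float, bits_per_param: float, gpu_gib: float, n_gpus: int = 1) -> bool:
    """True if the weights alone fit in the combined VRAM (ignores all overhead)."""
    return weight_memory_gib(n_params, bits_per_param) <= gpu_gib * n_gpus


if __name__ == "__main__":
    n = 70e9  # assumption: nominal parameter count of Llama 2 70B
    print(f"fp16 weights:  {weight_memory_gib(n, 16):.1f} GiB")
    print(f"4-bit weights: {weight_memory_gib(n, 4):.1f} GiB (before quantization overhead)")
    print("fp16 on 2 x 24 GiB:", fits(n, 16, 24, n_gpus=2))
    print("4-bit on 2 x 24 GiB:", fits(n, 4, 24, n_gpus=2))
```

Under these assumptions the fp16 weights come to roughly 130 GiB, far beyond 48 GiB of combined VRAM, which is why the 4-bit GPTQ and GGUF/GGML builds are the usual route on consumer GPUs. Real usage will be higher than the weights-only number.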

